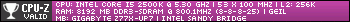

Post by osmiumoc on May 1, 2020 15:48:55 GMT -5

Heya all, it's been a while since I posted, as life has kept me busy lately.

I just got to play around with a modern Intel chip for the first time, and I wondered what all this per-core stuff is about. I see that Turbo Boost Max 3.0 marks preferred cores, just like Ryzen does, and those cores tend to be the best candidates for the highest single-core boost.

Now, X299 boards allow per-core voltage adjustments when overclocking, so is it generally a good idea to take the time to 'bin' each core for voltage/frequency? The benefits I potentially see are lower heat output from the whole die and lower power consumption, which in turn might help stabilize an OC that sits close to the edge of stability.
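To put rough numbers on the heat/power argument, here is a minimal Python sketch assuming the textbook CMOS approximation that dynamic power scales as C·V²·f, with capacitance, frequency and load held constant and leakage power ignored. The 1.35 V all-core and 1.34 V 'good core' values are borrowed from the EDIT below; the 10-core layout and the function names are hypothetical, chosen only for illustration.

```python
# Rough estimate of how a small per-core voltage trim affects dynamic power.
# Assumption: P_dyn ~ C * V^2 * f with C and f unchanged; leakage (which also
# grows with voltage and temperature) is ignored, so real savings will differ.


def dynamic_power_ratio(v_new: float, v_old: float) -> float:
    """Ratio P_new / P_old for the same frequency and switched capacitance."""
    return (v_new / v_old) ** 2


def package_power_ratio(core_voltages: list[float], v_all_core: float) -> float:
    """Average dynamic-power ratio of a per-core plan vs. one all-core voltage.

    Assumes every core runs the same frequency and load, so each contributes
    equally to package dynamic power.
    """
    ratios = [dynamic_power_ratio(v, v_all_core) for v in core_voltages]
    return sum(ratios) / len(ratios)


if __name__ == "__main__":
    # Hypothetical 10-core chip: nine 'good' cores trimmed to 1.34 V,
    # one weak core left at the 1.35 V all-core value.
    per_core_plan = [1.34] * 9 + [1.35]
    ratio = package_power_ratio(per_core_plan, 1.35)
    print(f"dynamic power vs. all-core: {ratio:.4f} ({(1 - ratio) * 100:.1f}% lower)")
```

With those inputs the estimate comes out at roughly 1.3% less dynamic power, which suggests the thermal benefit of trimming a few cores by 10 mV is small.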

On the other hand, it's a lot of work to test the individual cores on a HEDT beast, and in the end the worst core will still be the limiting factor. So should I not bother at all and just go with an 'old-school' Vcore override for all cores?

I'm also confused by the X299 Dark BIOS. It shows individual core VIDs, but are the higher VIDs the bad cores or the good ones? A higher VID might mean a core needs more voltage because it's bad, OR that it gets more voltage because it's a designated Turbo Boost Max 3.0 core for the 5 GHz bursts.

(Side note: I often read that on ambient cooling these chips are temperature-limited and that it's difficult to reach a dangerous voltage before thermal throttling kicks in. BUT I'm on water and approaching 1.4 V at 85°C, which leaves more thermal headroom. Can I push further, or am I testing my luck? This is just for a GPU bench table and will not run 24/7.)

EDIT: What I have found out so far from my own testing: the cores with the highest VID are the worst ones as binned by the factory. For example, Core 2 on my chip has a VID of 1.375 V (for 4.9 GHz), while the others are at 1.325 V. Core 2 is also the LEAST favored core for Turbo Boost Max 3.0 and sits at the bottom of the priority list.
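The rule found above (highest VID = weakest core) is easy to apply mechanically. This minimal Python sketch ranks cores from best to worst by VID. The VID table is hypothetical apart from Core 2 at 1.375 V and the other cores at 1.325 V, which come from the post; the 10-core count and the helper names are assumptions for illustration.

```python
# Hypothetical per-core VIDs (volts) at 4.9 GHz as read from the BIOS.
# Core 2 = 1.375 V and "others = 1.325 V" come from the post; the core
# count (10) is an assumption.
VIDS = {core: 1.325 for core in range(10)}
VIDS[2] = 1.375


def rank_by_vid(vids: dict[int, float]) -> list[int]:
    """Core IDs ordered from best (lowest VID) to worst (highest VID).

    Ties are broken by ascending core ID so the order is deterministic.
    """
    return sorted(vids, key=lambda core: (vids[core], core))


def weakest_cores(vids: dict[int, float]) -> list[int]:
    """All cores sharing the highest VID, i.e. the likely limiting cores."""
    highest = max(vids.values())
    return sorted(core for core, vid in vids.items() if vid == highest)


if __name__ == "__main__":
    print("best -> worst:", rank_by_vid(VIDS))
    print("weakest:", weakest_cores(VIDS))
```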

So far I have also found NO improvement in stability from taking the individual VIDs into account for voltage adjustments. Example: I set 1.35 V on all cores -> stable at 4.9 GHz. I lower the 'good' cores to 1.34 V and it becomes unstable.

EDIT 2: I may also have run into a bug on the X299 Dark. When I adjust the Tjmax setting, the package temperature sensor gets offset and does not change back when I return the setting to its previous value. I lowered Tjmax to 99°C -> package temp 23°C idle. I raised it to 105°C -> package temp now 31°C idle. Core temps are unaffected by this.

But when I switch back to 99°C Tjmax, the package temp stays at the higher reading. Voltages and clocks were unchanged throughout. I trust the new value a lot more, but I wonder why it wasn't like this in the first place...
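On Intel CPUs, the digital thermal sensors report how many degrees the die is below TjMax, and monitoring software typically computes temperature = TjMax - readout. A minimal Python sketch, assuming the board derives the package reading this way and the raw readout stayed constant, shows how a TjMax change alone could shift the reported value. It predicts a 6°C jump (23 -> 29°C), not the 8°C observed, and it cannot explain the reading staying high after going back to 99°C, so treat it as one possible contributor rather than a diagnosis.

```python
# Intel DTS readouts are "degrees below TjMax", so temperature = TjMax - readout.
# Assumption: the package reading is derived this way and the raw readout did
# not change between the two TjMax settings.


def reported_temp(tjmax_c: int, dts_readout_c: int) -> int:
    """Temperature in °C as software would report it for a given TjMax."""
    return tjmax_c - dts_readout_c


# Back-calculate the raw readout from the 23°C idle value seen at TjMax = 99°C.
readout = 99 - 23  # degrees below TjMax

if __name__ == "__main__":
    for tjmax in (99, 105):
        print(f"TjMax {tjmax}°C -> reported {reported_temp(tjmax, readout)}°C")
```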
